Introduction

I hate Proxmox hypervisor reboots. Any hypervisor reboot is a bad thing, because at the end of the day you’ll have VMs on the hypervisor and rebooting multiple servers is a stressful task.

Anyway, sometimes it is needed, e.g. when you update to the latest version of Proxmox and get that snazzy new kernel.

Here is the background to this reboot gone wrong:

TL;DR – the network card wasn’t set to auto

Well here is the irony. This server has been rebooted before and never gave networking issues. I guess the kernel upgrade from 5.x to 6.x broke something. The hardware is this:

- Supermicro X11DPL-i

- The network card is this according to the spec sheet: Intel® X722 + Marvell 88E1512

Upon reboot there was no link on the network card. Thus, ip a showed a network interface but no link. This made me panic because the data centre is far and I can’t get remote hands on quickly to check cables. But, why would the cable be out?
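The flags that ip prints between angle brackets can help tell an unplugged cable apart from an interface that was simply never brought up. Below is a minimal sketch of that check, assuming the usual iproute2 flag names (UP, LOWER_UP, NO-CARRIER). classify_flags is a hypothetical helper made up for illustration, not part of any Proxmox tooling.

```shell
#!/bin/sh
# classify_flags FLAGS
# FLAGS is the comma-separated list that `ip link show` prints between
# angle brackets, e.g. BROADCAST,MULTICAST,UP,LOWER_UP
classify_flags() {
    # Wrap in commas so that ",UP," cannot match inside "LOWER_UP".
    flags=",$1,"
    case "$flags" in
        *,UP,*) ;;                          # administratively up, keep checking
        *) echo "admin-down"; return ;;     # nobody ran "ip link set ... up"
    esac
    case "$flags" in
        *,NO-CARRIER,*) echo "no-carrier" ;;  # up, but no cable or link partner
        *,LOWER_UP,*)   echo "link-up" ;;     # up and the link is established
        *)              echo "unknown" ;;
    esac
}
```

On a live host the flags could be pulled out and classified like this (eno1 is the interface name used in this post):

flags=$(ip -o link show dev eno1 | sed -n 's/.*<\(.*\)>.*/\1/p'); classify_flags "$flags"

An answer of admin-down points at configuration rather than cabling.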

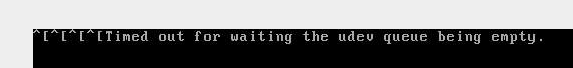

When your Proxmox server’s network interface is down, the startup takes much longer than usual and will be stuck here for a while before the login prompt appears:

“Timed out for waiting the udev queue being empty”. I imagine that because the network stack is down, all kinds of other things fail and/or have to time out first.

Moving on, I thought the actual problem might be the predictable network card names “issue” with modern Linux. After all, this is mentioned in the PVE upgrade guide. So I went down this rabbit hole first and spent around 40 minutes there.

Along the way I had this panic too:

The Supermicro BIOS is hung on startup at “92”!

What I learnt here was to use IPMI to “gracefully switch off the machine” and then switch it back on. Along the way I also took a detour to the BIOS and found a NIC setting on “Legacy”, which I changed to EFI. This setting changed both NICs to EFI.

Carrying on with my diagnosis, I found dmesg the most useful, with dmesg | grep -i "eth"

Great was my relief to see that the network card actually reports as up! So now I had a very strong hunch something was wrong with the config.
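A slightly wider filter than grep -i "eth" can help once interfaces have been renamed, because later kernel messages use the new name. This is a rough sketch only. nic_events is a hypothetical helper, the patterns are the message fragments I would expect for renames and link changes, and the default driver name i40e is an assumption based on the Intel X722 in this board.

```shell
#!/bin/sh
# nic_events [DRIVER]
# Reads kernel log text on stdin and keeps lines that usually matter for a
# NIC: interface renames, link state changes, and messages from the driver.
# DRIVER defaults to i40e (an assumption, see the text above).
nic_events() {
    driver=${1:-i40e}
    grep -iE "renamed from|link is (up|down)|link becomes ready|$driver"
}
```

Usage would be along the lines of: dmesg | nic_events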

Carrying on in the rabbit hole of predictable network card names, I did this:

mc -e /etc/default/grub

GRUB_CMDLINE_LINUX="net.ifnames=0 biosdevname=0"

update-grub

reboot

Nope, no luck, just new (old) network names. Eventually I spotted “manual” in the config and found this command via chat:

ip link set dev <interface_name> up

It worked! Once I had the network up, I changed the config of the ethernet card and the bridge to the legacy eth0 name, added “auto”, and ran service networking restart. Everything was back up!
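The ip link set step can be followed by a check that the link really came up, rather than eyeballing ip a. The sketch below polls the kernel's carrier flag under /sys/class/net. wait_for_carrier is a hypothetical helper and the 10 second default is an arbitrary assumption.

```shell
#!/bin/sh
# wait_for_carrier FILE [TIMEOUT_SECONDS]
# Polls a sysfs carrier file until it contains "1".
# Returns 0 once carrier is seen, 1 if the timeout expires first.
wait_for_carrier() {
    carrier_file=$1
    timeout=${2:-10}   # seconds; 10 is an arbitrary default
    elapsed=0
    while :; do
        # Reading carrier fails while the interface is administratively
        # down, so a read error is treated as "no carrier yet".
        state=$(cat "$carrier_file" 2>/dev/null) || state=0
        if [ "$state" = "1" ]; then
            return 0
        fi
        if [ "$elapsed" -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
        elapsed=$((elapsed + 1))
    done
}
```

With the interface name from this post, usage could look like:

ip link set dev eno1 up && wait_for_carrier /sys/class/net/eno1/carrier 10 && echo "link is up"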

Here was the original network card config:

cat /etc/network/interfaces.backup

auto lo

iface lo inet loopback

iface eno1 inet manual

auto vmbr0

iface vmbr0 inet static

address 41.72.151.242/29

gateway 41.72.151.241

bridge-ports eno1

bridge-stp off

bridge-fd 0

iface eno2 inet manual

Here is the rebooted network config:

cat /etc/network/interfaces

auto lo

iface lo inet loopback

#iface eno1 inet manual

iface eth0 inet auto

auto vmbr0

iface vmbr0 inet static

address 41.72.151.242/29

gateway 41.72.151.241

bridge-ports eth0

bridge-stp off

bridge-fd 0

iface eno2 inet manual
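One detail worth checking in a file like the one above: in interfaces(5), lines that start with auto (or its synonym allow-auto) are what ifup -a uses to pick the interfaces to bring up at boot. They are separate from the method at the end of an iface line. The sketch below checks whether a given interface is named on such a line. has_auto_line is a hypothetical helper written for illustration.

```shell
#!/bin/sh
# has_auto_line FILE IFACE
# Returns 0 if FILE contains an "auto" or "allow-auto" line that names IFACE,
# 1 otherwise. One such line may list several interfaces.
has_auto_line() {
    file=$1
    iface=$2
    awk -v want="$iface" '
        $1 == "auto" || $1 == "allow-auto" {
            for (i = 2; i <= NF; i++) if ($i == want) found = 1
        }
        END { exit (found ? 0 : 1) }
    ' "$file"
}
```

For example:

has_auto_line /etc/network/interfaces vmbr0 && echo "vmbr0 is brought up at boot"

Run against the file as listed, this finds an auto line for lo and vmbr0 but not for eth0, because iface eth0 inet auto is an iface line with a method named auto, not an auto line.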

Start time: 1AM

End time: 3AM