
Finding Runaway ZFS Disks

Sometimes one of the ZFS disks on your server runs away with you, and you quickly need a command to see which ZFS disk is the busiest.

Try this:

SSH into the NAS/server and run (iostat is part of the sysstat package):

```shell
iostat -zxc 5
```

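That shows which devices are the busiest (watch the %util column). ZFS volumes (zvols), which hold the VM disks on Proxmox, appear there as zd devices, e.g. zd144. Once you know the busy zd device, find out what it belongs to: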

```shell
find /dev -ls | grep zd144
```

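One of the matching lines is the /dev/zvol symlink that points to zd144, and its name contains the actual VM ID (e.g. vm-101-disk-0 belongs to VM 101).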
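Finding the zd number and then searching /dev can be wrapped in a small function. A minimal sketch, assuming the udev symlinks that OpenZFS on Linux creates under `/dev/zvol/<pool>/<dataset>` and the Proxmox `vm-<VMID>-disk-<N>` naming; the function name `zd_to_zvol` is hypothetical, and the optional second argument exists only so the lookup can be tried against a test directory:

```shell
#!/bin/sh
# Hypothetical helper: print the zvol dataset(s) whose /dev/zvol symlink
# resolves to the given kernel device, e.g. zd144 -> rpool/data/vm-101-disk-0.
# The second argument defaults to /dev/zvol; pass another directory to test.
zd_to_zvol() {
  local dev="$1" root="${2:-/dev/zvol}"
  find "$root" -type l | while IFS= read -r link; do
    target=$(readlink -f "$link")
    # Compare only the final path component, so zd144p1 does not match zd144
    if [ "$(basename "$target")" = "$dev" ]; then
      # Strip the root prefix to leave pool/dataset
      echo "${link#"$root"/}"
    fi
  done
}
```

On a typical Proxmox host, `zd_to_zvol zd144` would print something like `rpool/data/vm-101-disk-0`, i.e. a disk of VM 101.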

iostat flags:

-z: Suppresses lines where all statistics are zero (helps reduce noise).

-x: Shows extended statistics for each device (includes metrics like %util and, depending on the sysstat version, await or r_await/w_await; older versions also show svctm).

-c: Displays CPU usage statistics.

5: Repeats the output every 5 seconds.
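Picking the busiest zvol out of scrolling iostat output can also be scripted. A minimal sketch, assuming the extended (`-x`) layout with a `Device` header row that contains a `%util` column; because the column position differs between sysstat versions, it is looked up by name. The function name `busiest_zd` is hypothetical. It keeps only the last report it reads, since the first iostat report shows averages since boot:

```shell
#!/bin/sh
# Hypothetical helper: read `iostat -x` output on stdin and print the zd
# device with the highest %util in the LAST report, e.g. "zd144 85.50".
busiest_zd() {
  awk '
    # Header row ("Device" or "Device:"): locate the %util column and
    # start a fresh report
    $1 ~ /^Device/ {
      col = 0
      for (i = 1; i <= NF; i++) if ($i == "%util") col = i
      max = -1; dev = ""
      next
    }
    # Whole zvols only (zd0, zd16, ...), skipping partitions like zd16p1
    col && $1 ~ /^zd[0-9]+$/ && $col + 0 > max { max = $col + 0; dev = $1 }
    END { if (dev != "") printf "%s %.2f\n", dev, max }
  '
}
```

Usage: `LC_ALL=C iostat -dxz 5 2 | busiest_zd` takes two device reports 5 seconds apart and prints the zvol that was busiest in the second one; `LC_ALL=C` keeps the decimal separator a dot.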

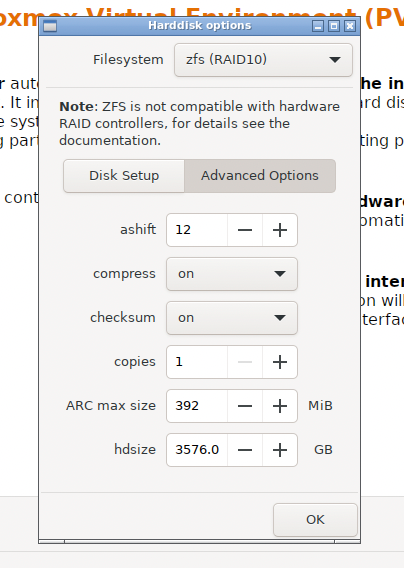

Defaults on a Proxmox ZFS RAID10 array with 4 x 4 TB NVMe disks:

The Ultimate ZFS Speed Demon

Please note: this section was generated by AI and then checked for accuracy.

- Mirrored vdevs (RAID10-style):
  - Best IOPS.
  - Easy rebuilds and minimal impact on performance during resilvering.
  - Scales well with parallel reads/writes.

- SLOG (Separate Log Device):
  - Reduces latency for synchronous writes (e.g. NFS, databases).
  - Should be high-endurance, power-loss-protected, and preferably PCIe Gen4 NVMe.
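Adding a SLOG to an existing pool is a single `zpool add` command. A dry-run sketch: `tank` and the device paths are placeholders, and `run` and `add_slog` are hypothetical helpers that only print the zpool command unless `DRY_RUN=0` is set. Passing two devices mirrors the log, which protects the last few seconds of synchronous writes if the SLOG fails together with a crash or power loss:

```shell
#!/bin/sh
# Hypothetical helper: print the command by default; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Hypothetical helper: add a SLOG to POOL; with two devices it is mirrored.
# Usage: add_slog POOL DEV [DEV2]
add_slog() {
  local pool="$1"
  shift
  if [ "$#" -eq 2 ]; then
    run zpool add "$pool" log mirror "$1" "$2"
  else
    run zpool add "$pool" log "$1"
  fi
}
```

`add_slog tank /dev/disk/by-id/nvme-A` only prints the command; `DRY_RUN=0 add_slog tank /dev/disk/by-id/nvme-A` runs it.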

What else should be done

1. L2ARC (Level 2 Adaptive Read Cache)

- When: Useful if your working set is larger than RAM.

- How: Add a second fast NVMe as L2ARC (not the same device as the SLOG).

- Note: L2ARC helps read-heavy workloads (e.g. media streaming, large dataset access).
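An L2ARC device is added the same way, with the `cache` keyword. Same dry-run pattern; `tank`, the device path and the helper names are placeholders. Cache devices cannot be mirrored, and ZFS simply stops using one that fails, so a single device is enough:

```shell
#!/bin/sh
# Hypothetical helper: print the command by default; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Hypothetical helper: add one L2ARC (cache) device to POOL.
# Usage: add_l2arc POOL DEV
add_l2arc() {
  run zpool add "$1" cache "$2"
}
```

Afterwards, `zpool iostat -v tank 5` shows per-device traffic, including the cache device.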

2. Lots of RAM (ARC)

- ZFS loves RAM: a common rule of thumb is at least 1 GB of RAM per 1 TB of storage, or more if you can.

- ARC is king for read performance and metadata caching.
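To see how much RAM the ARC is actually using, OpenZFS on Linux exposes counters in `/proc/spl/kstat/zfs/arcstats` (the `arc_summary` and `arcstat` tools read the same data). A minimal sketch that prints the current ARC size and its ceiling (`c_max`) in GiB; the function name `arc_gib` is hypothetical, and the optional argument only allows pointing it at a saved copy of the file:

```shell
#!/bin/sh
# Hypothetical helper: arcstats rows look like "<name> <type> <value>";
# print the "size" and "c_max" rows converted from bytes to GiB.
arc_gib() {
  awk '$1 == "size" || $1 == "c_max" { printf "%s %.1f GiB\n", $1, $3 / 1073741824 }' \
    "${1:-/proc/spl/kstat/zfs/arcstats}"
}
```

The rows are printed in the order they appear in the file.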

3. Compression (lz4)

- Enabled by default in most setups.

- Boosts performance and saves space with negligible CPU impact.
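Checking and setting compression is one `zfs` call each. A dry-run sketch with hypothetical helper names and a placeholder pool `tank`; changing the property only affects data written afterwards, and `compressratio` shows what compression is actually saving:

```shell
#!/bin/sh
# Hypothetical helper: print the command by default; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Show the compression setting and achieved ratio for DATASET and children.
compression_status() {
  run zfs get -r compression,compressratio "$1"
}

# Set lz4 on DATASET; child datasets inherit it unless set locally.
enable_lz4() {
  run zfs set compression=lz4 "$1"
}
```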

4. Ashift=12

- Ensures optimal alignment for 4K sector drives.

- Set at pool creation (zpool create -o ashift=12).

- Note: as the screenshot above shows, Proxmox already sets this correctly.
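ashift is the base-2 logarithm of the sector size ZFS assumes, so ashift=12 means 2^12 = 4096 bytes. A minimal sketch that computes it from a byte count, for example a drive's `/sys/block/<dev>/queue/physical_block_size`; the function name `ashift_for` is hypothetical. Many SSDs report 512 bytes there even though they work internally in larger pages, which is why ashift=12 is a common safe choice; `zpool get ashift rpool` shows the value a pool was created with when it was set explicitly:

```shell
#!/bin/sh
# Hypothetical helper: integer log2 of a sector size in bytes
# (4096 -> 12, 512 -> 9). Assumes a power of two, as sector sizes are.
ashift_for() {
  local size="$1" bits=0
  while [ "$size" -gt 1 ]; do
    size=$(( size / 2 ))
    bits=$(( bits + 1 ))
  done
  echo "$bits"
}
```

For example: `ashift_for "$(cat /sys/block/nvme0n1/queue/physical_block_size)"`.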

5. Disable Dedup (Unless You Really Need It)

- Too heavy on RAM and CPU.

- Only enable if your dataset has very high duplication and you’ve benchmarked it.
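Before turning dedup on, `zdb -S` can simulate it on the existing data and report the ratio it would achieve, and `zfs get -r dedup` confirms it is off everywhere. A dry-run sketch with the same hypothetical `run` helper; `tank` is a placeholder. `zdb -S` walks the whole pool, so expect it to take a long time and use a lot of memory on a large pool:

```shell
#!/bin/sh
# Hypothetical helper: print the command by default; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Hypothetical helper: confirm dedup is off on every dataset in POOL,
# then simulate dedup to estimate what it would save.
dedup_check() {
  run zfs get -r dedup "$1"
  run zdb -S "$1"
}
```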

6. Tune zfs_txg_timeout (Optional)

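- Sets the maximum number of seconds between transaction group commits (default 5). A higher value batches more writes into each commit; a lower value makes commits smaller and more frequent. On latency-sensitive systems, benchmark before and after changing it.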
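On Linux the current value can be read and changed at runtime through sysfs. A minimal sketch; the function names are hypothetical, `PARAMS` defaults to the standard `/sys/module/zfs/parameters` directory but can be pointed elsewhere for testing, and writing there needs root. A runtime change is lost at reboot; to persist it, put `options zfs zfs_txg_timeout=N` in `/etc/modprobe.d/zfs.conf` (with root on ZFS, as on Proxmox, also run `update-initramfs -u`):

```shell
#!/bin/sh
# ZFS module parameters directory; override PARAMS to test elsewhere.
PARAMS="${PARAMS:-/sys/module/zfs/parameters}"

# Hypothetical helper: print the current txg timeout in seconds.
get_txg_timeout() {
  cat "$PARAMS/zfs_txg_timeout"
}

# Hypothetical helper: set the txg timeout; rejects non-numeric input.
set_txg_timeout() {
  case "$1" in
    ''|*[!0-9]*) echo "usage: set_txg_timeout SECONDS" >&2; return 1 ;;
  esac
  echo "$1" > "$PARAMS/zfs_txg_timeout"
}
```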

7. Separate Metadata vdev (special vdev) [Optional, Advanced]

- For ultra-high-performance pools, especially with lots of small files.

- Use ultra-fast SSDs to hold only metadata, and mirror them: if the special vdev is lost, the whole pool is lost.
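A special vdev is added with the `special` keyword. A dry-run sketch with placeholder pool and device names and hypothetical helpers; it only receives metadata written after it is added, and the `special_small_blocks` dataset property can additionally send small file blocks there:

```shell
#!/bin/sh
# Hypothetical helper: print the command by default; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Hypothetical helper: add a mirrored special (metadata) vdev to POOL.
# Always mirrored here, because losing the special vdev loses the pool.
# Usage: add_special POOL DEV1 DEV2
add_special() {
  run zpool add "$1" special mirror "$2" "$3"
}
```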

Example

- 6 x 10TB drives → 3 mirrored vdevs (RAID10 style)

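- 1 x 2TB PCIe 4.0 NVMe → SLOG (ZIL); a SLOG only needs to hold a few seconds of synchronous writes, so a much smaller device would also do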

- 1 x 2TB PCIe 4.0 NVMe → L2ARC

- 128GB RAM (or more)
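The example layout can be created with one `zpool create` call. A dry-run sketch: `create_raid10` and `run` are hypothetical helpers, the device names are placeholders (use `/dev/disk/by-id/...` paths in practice), and the data disks are paired into mirrors in the order given, so list each mirror's two disks next to each other:

```shell
#!/bin/sh
# Hypothetical helper: print the command by default; DRY_RUN=0 executes it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Hypothetical helper: data disks paired into mirrors (RAID10 style),
# plus one SLOG and one L2ARC device.
# Usage: create_raid10 POOL SLOG L2ARC DISK1 DISK2 [DISK3 DISK4 ...]
create_raid10() {
  local pool="$1" slog="$2" l2arc="$3" vdevs=""
  shift 3
  if [ "$#" -lt 2 ] || [ $(( $# % 2 )) -ne 0 ]; then
    echo "need an even number of data disks" >&2
    return 1
  fi
  while [ "$#" -gt 0 ]; do
    vdevs="$vdevs mirror $1 $2"
    shift 2
  done
  # $vdevs is deliberately unquoted so it splits into separate words;
  # this assumes device paths without spaces (true for /dev/disk/by-id)
  run zpool create -o ashift=12 "$pool" $vdevs log "$slog" cache "$l2arc"
}
```

For the example above, `create_raid10 tank NVME1 NVME2 D1 D2 D3 D4 D5 D6` prints `+ zpool create -o ashift=12 tank mirror D1 D2 mirror D3 D4 mirror D5 D6 log NVME1 cache NVME2`.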

The ideal case (October 2025)

- 2U
  - So that you can install 25 Gbit/s network cards
  - So that you can install 3.5 inch drives if you wish to do so

- At least PCIe 4.0; at this point in time, 5.0 won’t make a huge difference

- Redundant power supply

- Easy to swap out NVMe and other drives when needed; that probably means U.2 for the NVMe drives